Serverless API Development on AWS with TypeScript - Part 3

Data Access Patterns Cont'd, Handling Events from DynamoDB Streams and EventBridge, Events Filtering and SMS notification via Amazon SNS

Introduction

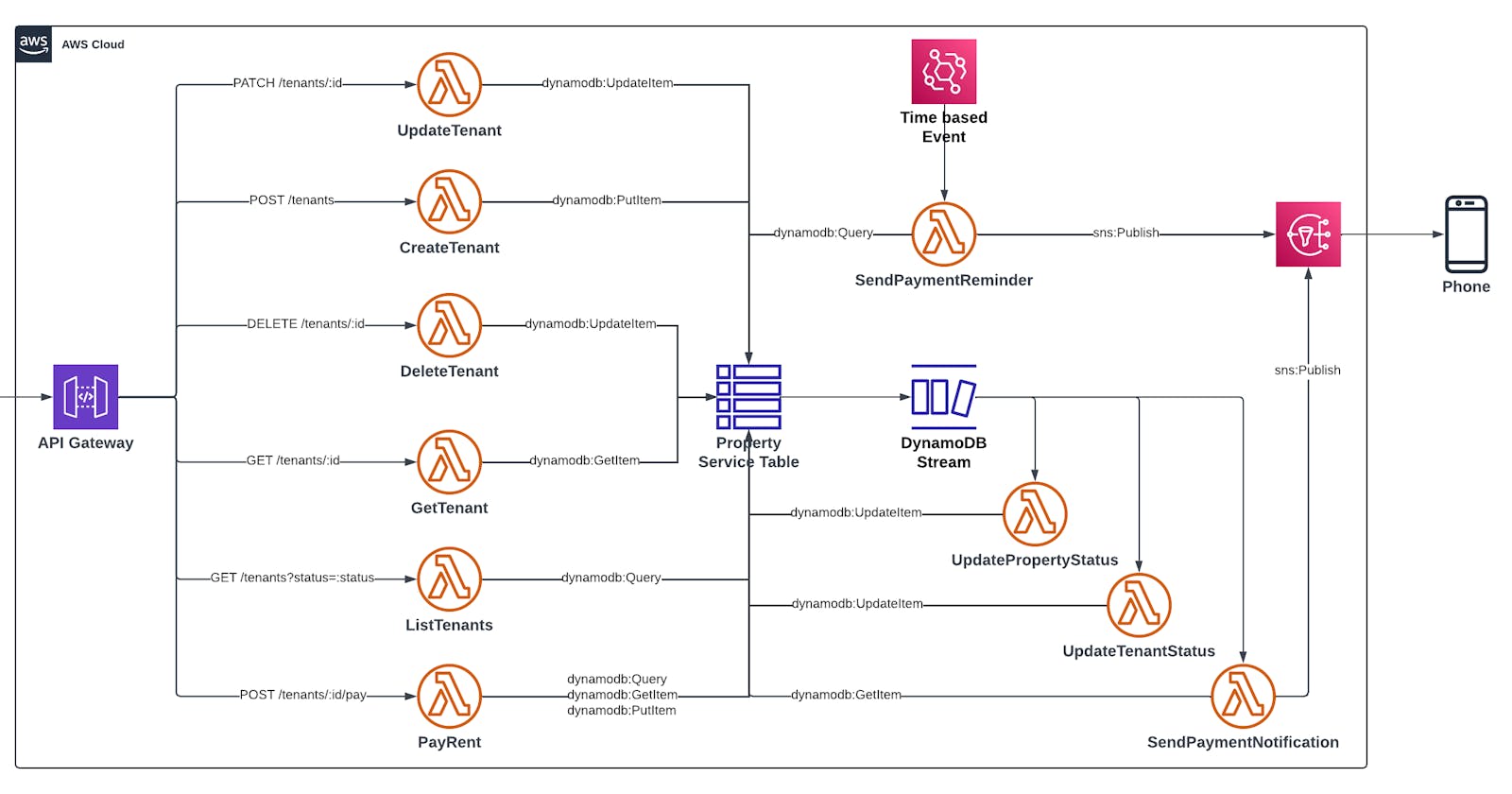

In the last part of this series, we will go deeper into Data Access Patterns: how they determine not only how we query a DynamoDB table but also what a query returns. We will also look at a useful feature of DynamoDB called Streams, which publishes change events for a DynamoDB table. We will then look at Lambda events whose source is something other than API Gateway, and at how to filter them so that our handler function receives only what our business logic requires. Finally, we will look at Amazon SNS and how we can use it to send an SMS to a customer. All of this is within the context of the Tenant Service project.

For a quick recap, Part 1 covered the project setup and the configuration needed when working with the Serverless Framework. Part 2 discussed the entities in the project with a focus on the Tenant entity. It also discussed the setup of the DynamoDB table, which is akin to a CREATE TABLE statement, along with its Global Secondary Indexes.

Data Access Patterns

As established in Part 2, the primary keys in DynamoDB are the basis for Data Access Patterns, that is, how data is accessed. These patterns affect not only how data is retrieved from a DynamoDB table but also what data is retrieved.

Sample Keys in Tenant Service

The code snippet below shows the different keys that form the Data Access Patterns in the Tenant Service. Recall from Part 2 that the default primary key returns only a tenant record because of how it was designed: it queries the table using two primary key attributes, PK and SK. When we use either GSI1PK or GSI2PK, we are using a different primary key, and therefore a different access pattern from the default primary key. Because the key is different, the result set is different too.

// tenant default primary key - composite primary key
// Retrieves a Tenant record ONLY
{
  PK: `tenant#id=724dab0a-6adb-4d64-91fb-a95b492d9120`,
  SK: `profile#id=724dab0a-6adb-4d64-91fb-a95b492d9120`
}

// Tenant GSI keys
// GSI1PK: Retrieves tenant and corresponding record with same GSI1PK
// GSI2PK: Retrieves all tenants
{
  GSI1PK: `tenant#id=724dab0a-6adb-4d64-91fb-a95b492d9120`,
  GSI2PK: `type#Tenant`
}

// Payment GSI1 key
// Retrieve all payments for a tenant
{
  GSI1PK: `tenant#id=724dab0a-6adb-4d64-91fb-a95b492d9120`
}

// Property default primary key - composite primary key
// Retrieves a Property record ONLY
{
  PK: `property#id=463fb471-e199-4375-b745-1c17cbbc1931`,
  SK: `property#id=463fb471-e199-4375-b745-1c17cbbc1931`
}

// Property GSI1 key
// Retrieves all properties
{
  GSI1PK: `type#Property`
}

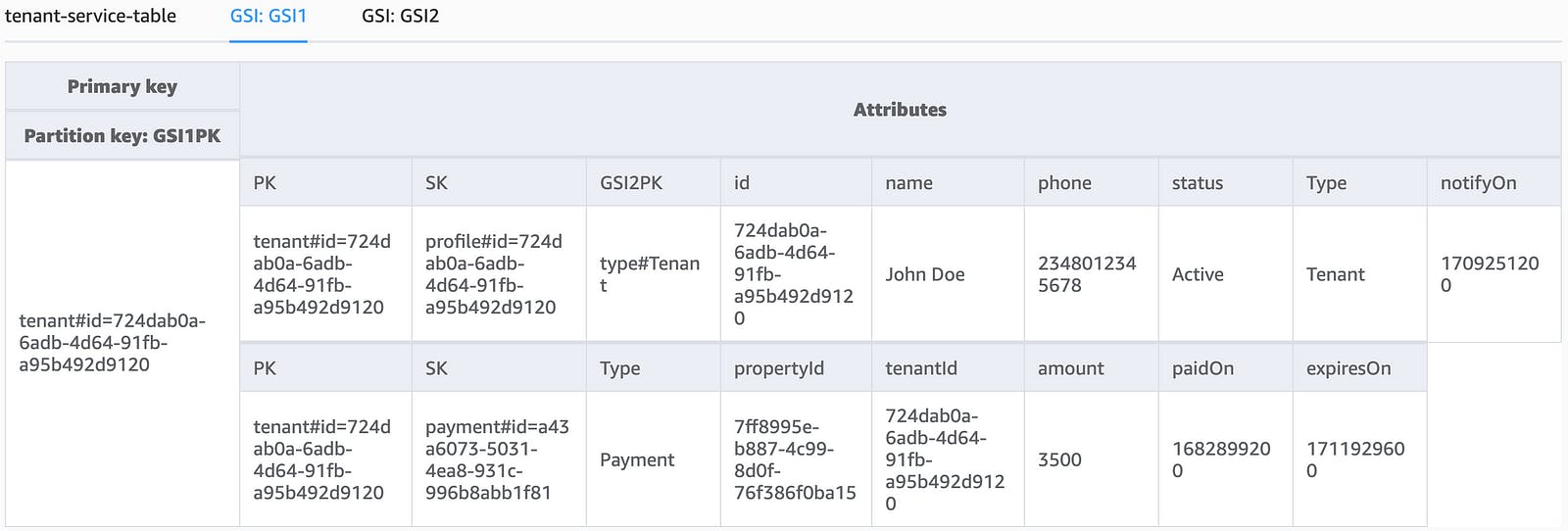

A fundamental concept behind single-table design is what can be described as a hierarchical relationship between records. A quick example in the Tenant Service is that every payment record must have a tenant record as its root. This means we can get a tenant and all payment records for that tenant in one call, depending on how we query the table. When a table is queried, you can imagine the result set as the output of a GROUP BY clause: you see all records, irrespective of entity type, that have the same value for the attribute you are querying by, which in this case is a GSI key. To illustrate this, take a look at the snapshot above.

The snapshot shows the GSI1 tab selected, which displays the records available in the index GSI1. Every record that has the GSI1PK attribute is listed. Under the attributes, we can see entries for both tenant and payment entities. Recall that every GSI must have its own primary key. In this case GSI1PK is the primary key, and it is the partition key as well. The records displayed share the same GSI1PK value and are therefore grouped on this value. See below how the GSI1PK keys are formed: both entities use the same definition.

// payment.ts
static BuildGSIKeys(tenantId: string) {
  return {
    GSI1PK: `tenant#id=${tenantId}`
  }
}

// tenant.ts
static BuildGSIKeys(prop?: {id: string}) { // given that id is not undefined
  return {
    GSI1PK: `tenant#id=${prop?.id}`
    // ...
  };
}

This means that if the index GSI1 is queried using a GSI1PK that matches a GSI1PK entry in the table, we will get the record for the tenant with that GSI1PK and all corresponding payment records. Data Access Patterns via GSIs are therefore a critical factor: they determine not only how a DynamoDB table is queried but also the result set that is returned. The beauty of using DynamoDB here is that a single database call retrieves all of these records, with no table join, because all the records reside in one table.
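
As a rough illustration of this access pattern, the sketch below builds the parameters for a query against GSI1 and then splits the returned items by their Type attribute. The parameter object has the shape that a DynamoDB query accepts, but the helper names buildGsi1Query and groupByType, and the idea of grouping in application code, are assumptions made for this example and are not taken from the Tenant Service code.

```typescript
// Hypothetical helper: parameters for querying the GSI1 index by tenant id.
// The result is a plain object in the shape a DynamoDB query expects.
interface Gsi1Query {
  TableName: string;
  IndexName: string;
  KeyConditionExpression: string;
  ExpressionAttributeNames: Record<string, string>;
  ExpressionAttributeValues: Record<string, string>;
}

function buildGsi1Query(tableName: string, tenantId: string): Gsi1Query {
  return {
    TableName: tableName,
    IndexName: 'GSI1',
    KeyConditionExpression: '#gsi1pk = :gsi1pk',
    ExpressionAttributeNames: { '#gsi1pk': 'GSI1PK' },
    // Same key format that the tenant and payment entities use for GSI1PK
    ExpressionAttributeValues: { ':gsi1pk': `tenant#id=${tenantId}` },
  };
}

// Items returned by one query can belong to different entities,
// so we split them on the Type attribute.
interface TableItem {
  Type: string;
  [attribute: string]: unknown;
}

function groupByType(items: TableItem[]): Record<string, TableItem[]> {
  const groups: Record<string, TableItem[]> = {};
  for (const item of items) {
    const bucket = groups[item.Type] ?? [];
    bucket.push(item);
    groups[item.Type] = bucket;
  }
  return groups;
}
```

With a result containing one tenant and its payments, groupByType would return one list under Tenant and another under Payment, all from a single query.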

DynamoDB Streams

When a tenant pays for a property, it makes sense to update the property’s status to NotAvailable so that it is no longer listed. Something similar applies to the tenant: if the tenant is making a payment for the first time, the status attribute will initially be NotActive, and it will need to be updated to indicate that this is an active tenant. In a design where data is saved in different tables, after saving the payment record we would have to ensure that the save-payment operation succeeded before going on to update the property or tenant record. DynamoDB has a better way of handling that: Streams.

DynamoDB Streams is a feature that generates events when data in a DynamoDB table changes. The change is reported as an INSERT, MODIFY, or REMOVE event, corresponding to creating, updating, and deleting an item. DynamoDB generates these events in near real-time, and each one describes the change made to the table. To make use of this feature, DynamoDB Streams is enabled in the Tenant Service table resource definition by setting StreamViewType, which is under the StreamSpecification key, as shown below.

StreamSpecification:
  StreamViewType: NEW_AND_OLD_IMAGES

StreamViewType controls what data is written to each stream record, not which changes produce a record. A record is written for every insert, update, and delete regardless of this setting. The available values are:

KEYS_ONLY: only the key attributes of the changed item

NEW_IMAGE: the entire item as it appears after the change

OLD_IMAGE: the entire item as it appeared before the change

NEW_AND_OLD_IMAGES: both the new and the old images of the item
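
To make the effect of StreamViewType more concrete, here is a small sketch of which item images a stream record can be expected to carry for a given view type and event name. The function imagesInRecord is a hypothetical helper written for this article; it only models the behaviour and does not call any AWS API.

```typescript
type StreamViewType = 'KEYS_ONLY' | 'NEW_IMAGE' | 'OLD_IMAGE' | 'NEW_AND_OLD_IMAGES';
type StreamEventName = 'INSERT' | 'MODIFY' | 'REMOVE';

// Hypothetical helper: which images a stream record carries
// for a given view type and event name.
function imagesInRecord(
  viewType: StreamViewType,
  eventName: StreamEventName,
): { newImage: boolean; oldImage: boolean } {
  // An inserted item has no previous version; a removed item has no new one.
  const newExists = eventName !== 'REMOVE';
  const oldExists = eventName !== 'INSERT';
  const wantsNew = viewType === 'NEW_IMAGE' || viewType === 'NEW_AND_OLD_IMAGES';
  const wantsOld = viewType === 'OLD_IMAGE' || viewType === 'NEW_AND_OLD_IMAGES';
  return { newImage: wantsNew && newExists, oldImage: wantsOld && oldExists };
}
```

For the Tenant Service table, which uses NEW_AND_OLD_IMAGES, an INSERT record carries only the new image, which is the one the payment handlers read.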

Thankfully, AWS Lambda is designed such that this event can be used as a trigger for Lambda functions.

With Streams enabled, we can be notified by DynamoDB when a payment record is saved to the table so that both the tenant and property status attributes are updated accordingly.

The Lambda function UpdatePropertyStatus is the event handler for updating the property status attribute when a payment is saved. It listens for an event from DynamoDB Streams for a new payment record and then performs the update on the property. How does it know which property was paid for? Every payment has a propertyId attribute identifying the property against which the payment was made. A similar setup is available for updating the tenant status attribute: the UpdateTenantStatus Lambda function.

UpdatePropertyStatus:
  handler: ./lambda/UpdatePropertyStatus/index.main
  description: Updates the status of a property after a successful payment
  memorySize: 128
  timeout: 10
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:UpdateItem
      Resource:
        - ${self:custom.DatabaseTable.arn}
  events:
    - stream:
        type: dynamodb
        arn: ${self:custom.DatabaseTable.streamArn}
        filterPatterns:
          - eventName: [INSERT]
            dynamodb:
              NewImage:
                Type:
                  S: [Payment]

The code snippet above shows the configuration for the UpdatePropertyStatus Lambda function, which is where we set the function trigger. Taking a look at the events key, you will notice the entry is set to stream, which refers to the event source of the function. The type key defines the type of stream, which is DynamoDB in this case, and arn identifies the source of the stream: the Tenant Service table.

The Lambda function below is the UpdatePropertyStatus handler. It takes a DynamoDBStreamEvent payload as its argument. This payload contains the record(s) that were published when the corresponding change occurred in the table. From this payload, we destructure NewImage, which is in the dynamodb field, to get the newly saved payment record. Because we are certain that it is a payment record, casting it with as Payment comes without worry. But how can we be certain? By event filtering.

// ...imports

export const main = async (event: DynamoDBStreamEvent) => {
  const { NewImage }: { [key: string]: any } = event.Records[0].dynamodb;
  const { propertyId } = unmarshall(NewImage) as Payment;

  await ddbDocClient.send(new UpdateCommand({
    Key: Property.BuildPK(propertyId),
    TableName: process.env.TENANT_TABLE_NAME,
    UpdateExpression: 'SET #status = :status',
    ExpressionAttributeValues: {
      ':status': PropertyStatus.NotAvailable
    },
    ExpressionAttributeNames: {
      '#status': 'status'
    }
  }));
}
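
The handler above reads only the first record of the event, while a single invocation can carry more than one stream record. As a hedged sketch of one way to cover the whole batch, the function below collects the propertyId of every newly inserted payment. The local types are cut-down stand-ins for the real stream event types, paymentPropertyIds is a hypothetical helper, and reading propertyId as a string attribute is an assumption about how the payment item is stored.

```typescript
// Minimal local types mirroring the parts of a DynamoDB stream event used here.
interface AttributeValue {
  S?: string;
  N?: string;
}

interface StreamRecord {
  eventName?: 'INSERT' | 'MODIFY' | 'REMOVE';
  dynamodb?: { NewImage?: Record<string, AttributeValue> };
}

interface StreamEvent {
  Records: StreamRecord[];
}

// Hypothetical helper: the propertyId of every newly inserted payment in the batch.
function paymentPropertyIds(event: StreamEvent): string[] {
  const ids: string[] = [];
  for (const record of event.Records) {
    const image = record.dynamodb?.NewImage;
    // Skip anything that is not an insert or that has no new image
    if (record.eventName !== 'INSERT' || !image) continue;
    // Skip items of other entity types
    if (image.Type?.S !== 'Payment') continue;
    const propertyId = image.propertyId?.S;
    if (propertyId !== undefined) ids.push(propertyId);
  }
  return ids;
}
```

Each id returned could then be passed to the same UpdateCommand call shown in the handler, one update per property.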

DynamoDB Event Filtering

It is important to note that Streams is enabled on a per-table basis. In effect, the DynamoDB table writes a stream record whenever any item in the table changes, whatever its entity type; the StreamViewType only determines what each record contains. For example, if a new tenant or property is saved, the table publishes that change as a generic record-added (INSERT) event, not as a tenant-record-added or property-record-added event. This means any Lambda function listening for record-added events from the stream will be triggered. We can filter DynamoDB events so that a Lambda function is only triggered when certain criteria are fulfilled. For the Tenant Service, we want the UpdatePropertyStatus function to be triggered only when a new payment record is saved to the table. Applying this event filter guards against unwanted invocations, where every function listening for record-added events is triggered by every insert. This is achieved using the filterPatterns key.

events:
  - stream:
      type: dynamodb
      arn: ${self:custom.DatabaseTable.streamArn}
      filterPatterns:
        - eventName: [INSERT]
          dynamodb:
            NewImage:
              Type:
                S: [Payment]

The snippet above is part of the Lambda configuration for UpdatePropertyStatus. Through the filterPatterns key, it specifies that the Lambda function should only be triggered if the eventName of the event is INSERT. NewImage represents the new record that was just saved to the table. In addition to the INSERT check, the filter evaluates the Type attribute of the saved record: if the attribute is a String (hence the S) and its value is equal to “Payment”, the function is triggered. With this filter in place, we can be sure that the Lambda function will only be triggered when a new payment record is saved to the table. Note that the Type attribute was added as part of the entity definition. See Part 2 of this series for details.
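
To build some intuition for how such a pattern is evaluated, here is a deliberately simplified matcher. It is not Lambda's actual implementation and it only supports exact string values, which is all the pattern above uses. The names Pattern and matchesPattern are hypothetical.

```typescript
// A pattern node is either a list of accepted values or a nested pattern.
type Pattern = { [key: string]: Pattern | string[] };

// Simplified matcher: every key in the pattern must exist in the data,
// and a leaf matches when the value equals one of the listed strings.
function matchesPattern(pattern: Pattern, data: unknown): boolean {
  if (typeof data !== 'object' || data === null) return false;
  const record = data as Record<string, unknown>;
  return Object.keys(pattern).every((key) => {
    const expected = pattern[key];
    const actual = record[key];
    if (Array.isArray(expected)) {
      // Leaf: the actual value must be one of the accepted strings
      return typeof actual === 'string' && expected.some((value) => value === actual);
    }
    // Nested pattern: descend into the corresponding part of the data
    return matchesPattern(expected, actual);
  });
}

// The filter used by UpdatePropertyStatus, written as a pattern object.
const paymentInsertPattern: Pattern = {
  eventName: ['INSERT'],
  dynamodb: { NewImage: { Type: { S: ['Payment'] } } },
};
```

A stream record for a newly inserted payment satisfies paymentInsertPattern, while an inserted tenant or a modified payment does not, so only the former would reach the handler.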

Time-Based Events with EventBridge

The payment entity has an expiresOn attribute that holds the expiration date of the payment. It is calculated when a payment object is created. After a payment record is saved, the UpdateTenantStatus function updates the status attribute of the tenant and sets the value of the notifyOn attribute. This attribute stores the date when the tenant will be notified about the coming expiration of rent, which is set to 1 month before expiry. Given this requirement, there needs to be a monthly polling of the tenant records that meet the criteria, so that those tenants can be notified of rent expiration in the coming month.

In the architecture diagram, there is a SendPaymentReminder function that is triggered by Amazon EventBridge. EventBridge is set up to trigger the SendPaymentReminder function every month at a set time, using cron-style scheduling.

SendPaymentReminder:
  handler: ./lambda/SendPaymentReminder/index.main
  description: Notifies tenants via SMS that their rent will expire in the coming month.
  memorySize: 512
  timeout: 10
  environment:
    SENDER_ID: 'LandLord'
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:Query
        - sns:Publish
      Resource:
        - !Join ["/", ["${self:custom.DatabaseTable.arn}", "index", "*"]]
        - '*'
  events:
    - schedule: cron(0 12 1W * ? *)

Above is the configuration for the SendPaymentReminder function. Our focus here is the events key. It is set to schedule, and the value uses cron syntax to run the function at 12:00 UTC on the weekday nearest the 1st of every month (that is what 1W means in the day-of-month field). Let’s take a look at the event handler for this EventBridge-triggered event.

// ...imports

export const main = async () => {
  const { year, month } = DateTime.now();
  // Unix time (in seconds) of the first instant of the current month, in UTC
  const date = DateTime.utc(year, month).toUnixInteger();
  const { GSI2PK } = Tenant.BuildGSIKeys();

  const response = await ddbDocClient.send(new QueryCommand({
    IndexName: GSIs.GSI2,
    TableName: process.env.TENANT_TABLE_NAME,
    KeyConditionExpression: '#gsi2pk = :gsi2pk',
    FilterExpression: '#notifyOn = :notifyOn AND #status = :status',
    ExpressionAttributeValues: {
      ':gsi2pk': GSI2PK,
      ':notifyOn': date,
      ':status': TenantStatus.Active
    },
    ProjectionExpression: '#phone, #name',
    ExpressionAttributeNames: {
      '#name': 'name',
      '#phone': 'phone',
      '#status': 'status',
      '#notifyOn': 'notifyOn',
      '#gsi2pk': 'GSI2PK',
    }
  }));

  // Await every send so the function does not return before the SMS requests complete
  await Promise.all((response.Items ?? []).map(item => {
    const { name, phone } = item as Pick<Tenant, 'name' | 'phone'>;
    const message = `Hi ${name}, I hope you're doing great. This is a gentle reminder that your rent will expire next month. Please endeavour to pay in due time`;
    return sendSMS(message, phone, process.env.SENDER_ID);
  }));
};

In this very simple invocation, there is no need for the event argument, hence the empty parameter list for the main function. When the function is triggered, it computes the date, builds the GSI2PK key, and then queries DynamoDB. If there are tenants that meet the criteria, an SMS is sent to each of them.
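
Because the query compares notifyOn for equality with the first instant of the current month in UTC (as Unix seconds), the stored notifyOn has to be normalised the same way for a tenant to be picked up. The UpdateTenantStatus implementation is not shown in this article, so the following is only a sketch of how such a value could be derived from an expiry date, using the built-in Date instead of Luxon. Both computeNotifyOn and startOfMonthUtc are hypothetical names.

```typescript
// Hypothetical helper: Unix time (seconds) of the first instant, in UTC,
// of the month before the month in which the payment expires.
function computeNotifyOn(expiresOn: Date): number {
  const year = expiresOn.getUTCFullYear();
  const month = expiresOn.getUTCMonth(); // 0-based: January is 0
  // Date.UTC normalises a month of -1 to December of the previous year.
  return Date.UTC(year, month - 1, 1) / 1000;
}

// The value the scheduled handler compares against for a given "now":
// the first instant of the current month in UTC, as Unix seconds.
function startOfMonthUtc(now: Date): number {
  return Date.UTC(now.getUTCFullYear(), now.getUTCMonth(), 1) / 1000;
}
```

Under these assumptions, a payment expiring on any day in March would be matched by the run that happens in February, whichever day of February the schedule fires on.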

SMS with Amazon SNS

Sending SMS is easy using the Amazon SNS API. As with every managed service, we only need to provide the necessary configuration and we are ready to use the service. This assumes your account is no longer in the SMS sandbox; otherwise you have to register and verify every phone number you intend to send an SMS to.

In the Lambda configuration below, we specify the permissions needed to send SMS with the SNS API.

# SendPaymentReminder/config.yml - other portions omitted for brevity
iamRoleStatements:
  - Effect: Allow
    Action:
      - sns:Publish

The current implementation of the Tenant Service communicates with tenants on two occasions. The first is after a payment is made, and the second is when a reminder about the rent’s expiration is due. On both occasions, SNS is used to send an SMS to the tenant's phone number. In the simple code below, the sendSMS function takes three arguments: message, phoneNumber, and sender. These are used by the SNS API to forward the intended message to the tenant.

// ...imports

export const sendSMS = async (message: string, phoneNumber: string, sender: string) => {
  await snsClient.send(new PublishCommand({
    PhoneNumber: phoneNumber,
    Message: message,
    MessageAttributes: {
      'AWS.SNS.SMS.SenderID': {
        DataType: 'String',
        StringValue: sender,
      }
    }
  }));
}
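
SNS expects the PhoneNumber value in E.164 format, so it can be worth checking the stored number before calling sendSMS. The helpers below are a minimal sketch of such a check. isE164 and toE164 are hypothetical names and are not part of the Tenant Service or of the AWS SDK.

```typescript
// E.164: a leading "+", then up to 15 digits, the first of which is not 0.
const E164_PATTERN = /^\+[1-9]\d{1,14}$/;

function isE164(phoneNumber: string): boolean {
  return E164_PATTERN.test(phoneNumber);
}

// Hypothetical normaliser: strips spaces, dashes, and parentheses,
// then returns the number only if the result is valid E.164.
function toE164(raw: string): string | undefined {
  const compact = raw.replace(/[\s\-()]/g, '');
  return isE164(compact) ? compact : undefined;
}
```

A caller could skip or log any tenant whose number does not pass toE164 instead of sending a request that SNS would reject.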

Conclusion

In this article we talked about DynamoDB keys as the basis for Data Access Patterns, and how they can be a factor in determining the resultset of queries.

We also saw that DynamoDB Streams is a great feature and should be used where necessary. However, it is essential to apply a filter to the events so that the appropriate Lambda functions are triggered instead of having all functions that are listening to a particular source be triggered at the same time. The filter also helps us to avoid having several if conditions inside Lambda functions.

Understanding event types and the structure of their payload is key to manipulating the data. It is okay to log the event payload to CloudWatch to get an idea of this structure if the documentation is not clear enough.

This brings me to the conclusion of the series: Serverless API Development on AWS with TypeScript. I am glad to have started this series, and I believe it is presented in a way that is easy to understand.